Have you ever wondered how to make your content more engaging and effective? A/B testing is a simple yet powerful method that can help you fine-tune your content strategy. In this blog, we will explore what A/B testing for content creators is all about and why it matters for anyone looking to boost their content’s performance and overall success.

What Is A/B Testing?

A/B testing, also known as split testing or bucket testing, is a method used to compare two versions of a webpage, app, or other digital content to determine which one performs better. Essentially, it is an experiment in which two or more variants (such as A and B) are shown to users at random, and statistical analysis is used to determine which variant performs better for a given conversion goal.

A/B testing for content creators involves creating two versions of a web page or app screen: the original (control or A) and a modified version (variation or B). These versions are then shown to different segments of users at the same time.

The users’ interactions with each version are measured and analyzed to see which one performs better in terms of specific metrics like click-through rates, conversions, or user engagement.

For example, if you want to test which headline on your landing page attracts more clicks, you would create two versions of the page with different headlines. Half of your visitors would see the original headline, and the other half would see the new one. By comparing the performance of the two versions, you can determine which headline is more effective.
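The comparison in the headline example comes down to simple arithmetic. Here is a minimal Python sketch; the visitor and click counts are made up for illustration, and the function names are our own rather than part of any tool:

```python
def click_through_rate(clicks: int, visitors: int) -> float:
    """Share of visitors who clicked, as a fraction between 0 and 1."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return clicks / visitors


def relative_lift(rate_a: float, rate_b: float) -> float:
    """How much better (or worse) B did compared with A, relative to A."""
    if rate_a == 0:
        raise ValueError("rate_a must be non-zero")
    return (rate_b - rate_a) / rate_a


# Hypothetical counts for the two headlines.
ctr_a = click_through_rate(clicks=40, visitors=1000)  # original headline
ctr_b = click_through_rate(clicks=50, visitors=1000)  # new headline
lift = relative_lift(ctr_a, ctr_b)
print(f"A: {ctr_a:.1%}  B: {ctr_b:.1%}  lift: {lift:+.0%}")
```

A raw difference like this is only a starting point. Whether it is large enough to trust depends on the sample size, which is where statistical significance comes in.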

Benefits of A/B Testing & How It Helps Your Content Performance

A/B testing for content creators offers numerous benefits for businesses and marketers. Here are some of the key advantages:

1. Increased User Engagement

A/B testing for content creators allows you to test different elements of your digital content to see which ones resonate more with your audience. By optimizing these elements, you can increase user engagement, making your content more appealing and interactive.

2. Improved Conversion Rates

By testing different versions of a web page or app, you can identify which version leads to higher conversion rates. This could involve testing different headlines, calls-to-action, or page layouts to see which one encourages more users to take the desired action, such as making a purchase or signing up for a newsletter.

3. Reduced Bounce Rates

A/B testing for content creators helps you understand what keeps users on your site and what makes them leave. By optimizing elements like copy, images, and headlines, you can reduce bounce rates and keep visitors on your site longer.

4. Data-Driven Decisions

A/B testing for content creators eliminates guesswork by providing concrete data on what works and what does not. This allows businesses to make informed decisions based on actual user behavior rather than assumptions or intuition.

5. Minimized Risks

Introducing new features or making significant changes to your website can be risky. A/B testing for content creators allows you to test these changes on a smaller scale before fully implementing them, minimizing the risk of negative impacts on user experience or conversion rates.

6. Better Understanding of Target Audience

By analyzing the results of their A/B tests, content creators and businesses can gain valuable insights into their target audience’s preferences, behaviors, and needs. This information can be used to tailor future marketing efforts and product development to better meet the needs of the audience.

7. Higher Return on Investment (ROI)

A/B testing for content creators helps businesses optimize their digital content for maximum effectiveness, leading to higher conversion rates and increased revenue. By making data-driven improvements, businesses can achieve a better return on their marketing investment.

Step-by-Step Guide to Conduct A/B Testing for Content Creators

Whether you are testing blog post titles or video elements, the process involves comparing two versions to see which one yields better results. Let us dive into a step-by-step guide to A/B testing your blog posts and videos:

1. Define Your Goals

Before you start testing, it is crucial to define what you want to achieve. Are you looking to increase click-through rates, engagement, or conversions? Your goals will guide the entire testing process.

- Primary Goals: These are the main objectives, such as increasing the conversion rate or reducing the bounce rate.

- Secondary Goals: These could include metrics like time spent on the page, click-through rates, or engagement metrics.

For example, if you are testing blog post titles, your primary goal might be to increase the number of clicks on the post. Secondary goals could include the time spent reading the post or the number of shares on social media.

2. Identify the Variable to Test

Choose one element to test at a time to ensure that you can attribute any changes in performance to that specific element. For blog posts, this could be the title, the introduction, or the call-to-action. For videos, you might test the video length, title, thumbnail, or on-screen text.

- Blog Post Titles: Test different variations of your blog post titles to see which one attracts more clicks.

- Video Elements: Test different video lengths, titles, thumbnails, or background music to see which version performs better.

3. Create Variations

Create two versions of the content: the original (A) and the variation (B). Ensure that the only difference between the two versions is the element you are testing.

- Blog Posts: Write two different titles for the same blog post.

- Videos: Create two versions of the same video with different thumbnails or lengths.
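Before launching, it can help to confirm that the two versions really differ in only one element. The sketch below assumes each version is described as a simple dictionary of fields; the field names and sample content are hypothetical:

```python
def changed_fields(version_a: dict, version_b: dict) -> list[str]:
    """Return the names of the fields whose values differ between the two versions."""
    all_keys = sorted(set(version_a) | set(version_b))
    return [key for key in all_keys if version_a.get(key) != version_b.get(key)]


# Hypothetical blog post variants: only the title should differ.
post_a = {"title": "10 Tips for Better Headlines", "intro": "Same intro", "cta": "Subscribe"}
post_b = {"title": "How to Write Headlines That Get Clicks", "intro": "Same intro", "cta": "Subscribe"}

diff = changed_fields(post_a, post_b)
if len(diff) != 1:
    raise ValueError(f"Expected exactly one changed element, found: {diff}")
print(f"Testing element: {diff[0]}")
```

If the check reports more than one changed field, split the changes into separate tests so that each result can be attributed to a single element.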

4. Split Your Audience

Randomly split your audience into two groups. One group will see version A, and the other will see version B. This ensures that the test results are not biased.

- Blog Posts: Use an A/B testing tool to randomly show different titles to different visitors.

- Videos: Use video platforms or social media tools that support A/B testing to show different versions to different viewers.
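If you are curious how a random split can work under the hood, one common approach is to hash a visitor identifier and use the result to pick a bucket. This is only a sketch of the idea, not a description of how any particular tool does it; the `visitor_id` format and the experiment name are assumptions made for the example:

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str = "blog-title-test") -> str:
    """Deterministically place a visitor in bucket "A" or "B"."""
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Treat the hash as a large integer: even goes to A, odd goes to B.
    return "A" if int(digest, 16) % 2 == 0 else "B"


# Simulate 10,000 hypothetical visitors and count how many land in each bucket.
visitors = [f"visitor-{n}" for n in range(10_000)]
counts = {"A": 0, "B": 0}
for visitor in visitors:
    counts[assign_variant(visitor)] += 1
print(counts)
```

Hashing rather than flipping a coin on every page load means a returning visitor always sees the same version, while the overall split still comes out close to 50/50.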

5. Run the Test

Run the test long enough to gather sufficient data. The duration will depend on your website traffic or video views. Typically, a minimum of two weeks is recommended so that the test covers complete weekly cycles, including weekends, and evens out day-to-day variations in user behavior.

- Blog Posts: Monitor the performance of each title over a set period.

- Videos: Track metrics like view count, engagement rate, and click-through rate for each video version.
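How long is "sufficient" depends on how small a difference you want to detect. The sketch below uses the standard normal-approximation formula for comparing two proportions to estimate the visitors needed per version. The 4% baseline, 5% target, 5% significance level, 80% power, and daily traffic figure are all illustrative assumptions:

```python
import math
from statistics import NormalDist


def visitors_per_variant(baseline_rate: float, expected_rate: float,
                         alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate sample size per variant for a two-sided two-proportion test."""
    if baseline_rate == expected_rate:
        raise ValueError("rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    pooled = (baseline_rate + expected_rate) / 2
    numerator = (
        z_alpha * math.sqrt(2 * pooled * (1 - pooled))
        + z_beta * math.sqrt(baseline_rate * (1 - baseline_rate)
                             + expected_rate * (1 - expected_rate))
    ) ** 2
    return math.ceil(numerator / (expected_rate - baseline_rate) ** 2)


def days_needed(per_variant: int, daily_visitors: int) -> int:
    """Days to fill both variants when traffic is split evenly between them."""
    return math.ceil(2 * per_variant / daily_visitors)


n = visitors_per_variant(baseline_rate=0.04, expected_rate=0.05)
print(n, "visitors per variant;",
      days_needed(n, daily_visitors=1000), "days at 1,000 visitors per day")
```

With these assumptions, detecting a one-point improvement takes several thousand visitors per version, which is why low-traffic pages need longer tests.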

6. Gather and Analyze Data

Collect data on the performance of each version. Use analytics tools to track key metrics and compare the results.

- Blog Posts: Use tools like Google Analytics to track clicks, time on page, and bounce rate.

- Videos: Use video analytics tools to track view counts, engagement rates, and click-through rates.
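If your analytics tool lets you export raw events, turning them into per-version metrics is straightforward. The sketch below assumes a hypothetical export format of `(visitor_id, variant, event_type)` rows; real exports will look different and need mapping into this shape:

```python
from collections import defaultdict

# Hypothetical event log: (visitor_id, variant, event_type).
events = [
    ("v1", "A", "view"), ("v1", "A", "click"),
    ("v2", "A", "view"),
    ("v3", "B", "view"), ("v3", "B", "click"),
    ("v4", "B", "view"), ("v4", "B", "click"),
    ("v5", "B", "view"),
]


def summarize(event_log):
    """Count unique viewers and clickers per variant and derive the click-through rate."""
    viewers = defaultdict(set)
    clickers = defaultdict(set)
    for visitor_id, variant, event_type in event_log:
        if event_type == "view":
            viewers[variant].add(visitor_id)
        elif event_type == "click":
            clickers[variant].add(visitor_id)
    summary = {}
    for variant in sorted(viewers):
        views = len(viewers[variant])
        # Only count clicks from visitors who were also recorded as viewers.
        clicks = len(clickers[variant] & viewers[variant])
        summary[variant] = {"views": views, "clicks": clicks, "ctr": clicks / views}
    return summary


for variant, stats in summarize(events).items():
    print(variant, stats)
```

Counting unique visitors rather than raw events keeps one very active visitor from inflating a version's numbers.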

7. Determine the Winner

Analyze the data to determine which version performed better. Look for statistically significant differences in the metrics you are tracking.

- Blog Posts: Determine which title resulted in more clicks and a longer time on the page.

- Videos: Identify which video version had higher engagement and click-through rates.
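A common way to check whether a difference in click rates is statistically significant is a two-proportion z-test. The sketch below is a minimal version using only Python's standard library; the click and visitor counts are hypothetical, and the 0.05 threshold is a conventional choice rather than a rule:

```python
import math
from statistics import NormalDist


def two_proportion_z_test(clicks_a, visitors_a, clicks_b, visitors_b):
    """Return (z, two-sided p-value) for the difference between two click rates."""
    rate_a = clicks_a / visitors_a
    rate_b = clicks_b / visitors_b
    pooled = (clicks_a + clicks_b) / (visitors_a + visitors_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if std_err == 0:
        raise ValueError("cannot test when no one (or everyone) clicked")
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: 200 of 5,000 clicked on A, 260 of 5,000 clicked on B.
z, p = two_proportion_z_test(clicks_a=200, visitors_a=5000, clicks_b=260, visitors_b=5000)
if p < 0.05:
    winner = "B" if z > 0 else "A"
else:
    winner = "no clear winner"
print(f"z = {z:.2f}, p = {p:.4f}, winner: {winner}")
```

When the p-value is above the threshold, the honest conclusion is "no clear winner", and the usual options are to keep the original or run the test longer.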

8. Implement the Changes

Once you have identified the winning version, implement the changes permanently. Use the insights gained from the test to inform future content creation and optimization.

- Blog Posts: Use the winning title for your blog post and apply similar strategies to future posts.

- Videos: Use the winning video elements in future video content to improve performance.

Best Practices for A/B Testing

When you run A/B tests on your content and measure the results, a few considerations make the difference between reliable and misleading findings. Here are some best practices to keep your A/B tests on blog posts and videos effective and trustworthy:

Test One Element at a Time

When conducting A/B tests, it is crucial to isolate a single variable to understand its impact clearly. For instance, if you are testing a web page, you might want to change only the headline or the call-to-action button, but not both simultaneously.

This approach helps you pinpoint exactly which change led to the observed difference in performance. Testing multiple elements at once can muddy the results, making it difficult to determine which change was responsible for any improvement or decline in performance.

Ensure Statistical Significance

Statistical significance is a key concept in A/B testing. It tells you how unlikely it is that the observed difference between the two versions arose from random chance alone, so you can be reasonably confident it reflects a real difference in performance. To achieve statistical significance, you need a sufficiently large sample size and enough time for the test to run.

Tools like HubSpot’s Significance Calculator or Visual Website Optimizer’s (VWO) statistical significance tool can help you determine if your results are statistically significant. Without statistical significance, you risk making decisions based on unreliable data, which can lead to suboptimal outcomes.
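To see why sample size matters so much, it helps to look at a confidence interval for the difference between the two conversion rates. The sketch below uses a simple normal approximation and hypothetical numbers; it illustrates the concept and is not a description of how the calculators mentioned above work internally:

```python
import math
from statistics import NormalDist


def difference_interval(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Normal-approximation confidence interval for (rate_b - rate_a)."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    std_err = math.sqrt(rate_a * (1 - rate_a) / n_a + rate_b * (1 - rate_b) / n_b)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    diff = rate_b - rate_a
    return diff - z * std_err, diff + z * std_err


# Same conversion rates (10% vs 12%) at two hypothetical sample sizes.
small = difference_interval(conv_a=20, n_a=200, conv_b=24, n_b=200)
large = difference_interval(conv_a=500, n_a=5000, conv_b=600, n_b=5000)
for label, (low, high) in (("200 per variant", small), ("5,000 per variant", large)):
    verdict = "significant" if low > 0 or high < 0 else "not significant"
    print(f"{label}: [{low:+.3f}, {high:+.3f}] -> {verdict}")
```

With 200 visitors per version the interval includes zero, so the same two-point gap could easily be noise. With 5,000 per version the interval sits entirely above zero.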

Tools for A/B Testing for Content Creators

Using A/B testing tools or plugins can streamline the process and make your results more reliable by handling the audience split and data collection for you. Here are two popular options:

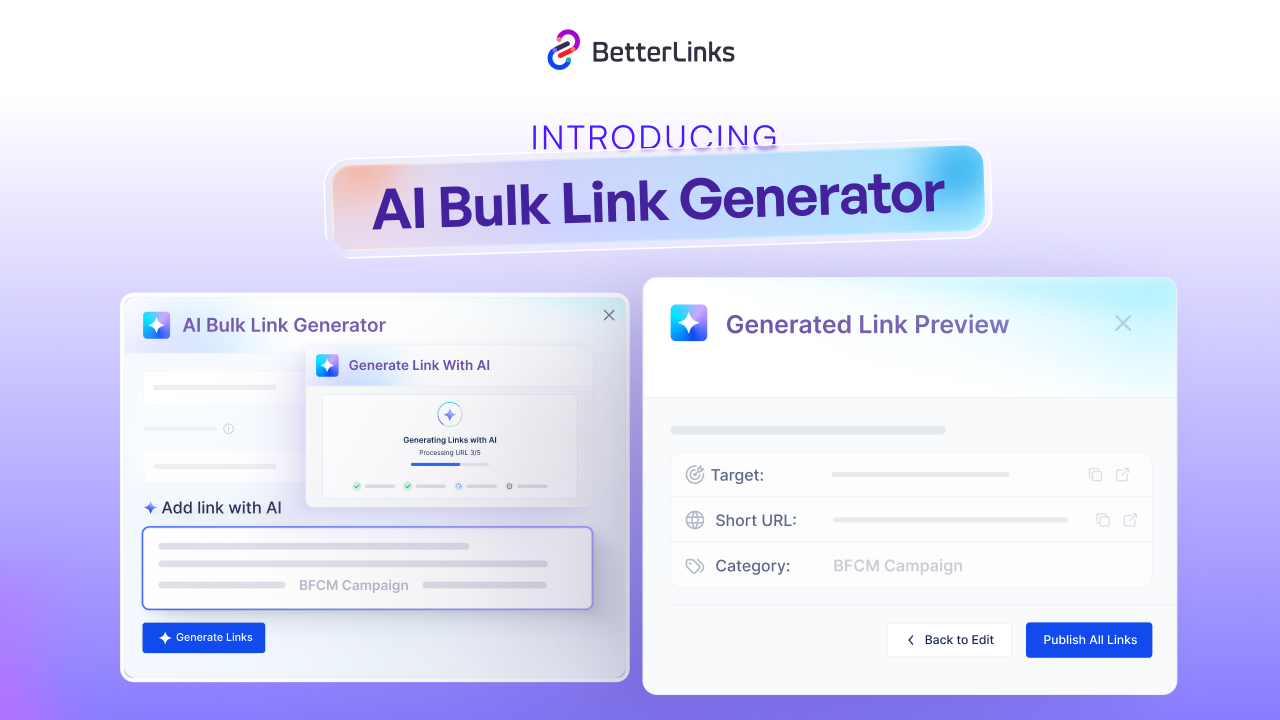

BetterLinks

This tool is great for managing and tracking campaign URLs. It allows you to conduct A/B split tests easily, helping you measure the performance of different marketing campaigns and optimize your strategies accordingly.

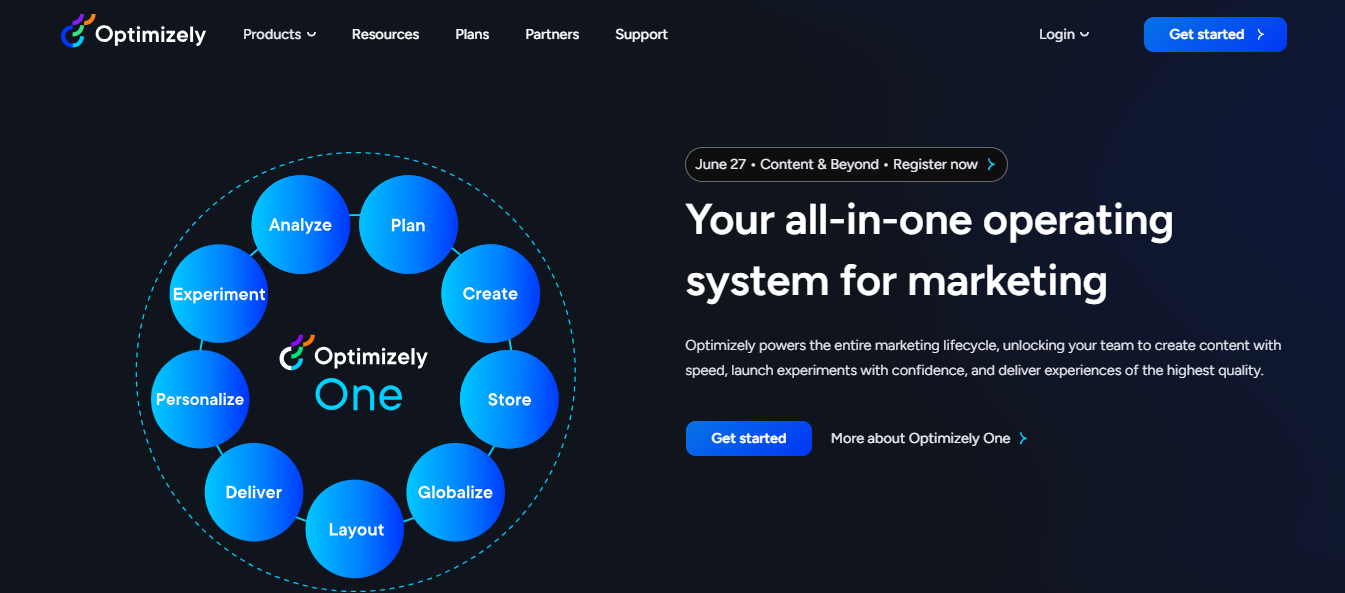

Optimizely

Known for its robust features, Optimizely offers enterprise-grade A/B testing capabilities. It supports web and feature experimentation, content management, and personalization, making it ideal for large businesses with complex testing needs.

Common Mistakes to Avoid in A/B Testing for Content Creators

Testing too many elements simultaneously produces results you cannot interpret. For example, altering the banner, button size, and product info all at once makes it unclear which change improved sales.

Ending tests prematurely can lead to misleading results. For instance, testing only from Monday to Thursday might miss weekend user behavior, skewing the data.

Dismissing inconvenient data can result in missed opportunities and flawed strategies. Ignoring poor performance data of a new feature, for example, prevents understanding and addressing the issue.
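One way to guard against the premature-ending mistake described above is a quick check that a planned test window spans whole weeks. This is a small sketch; the two-week minimum mirrors the recommendation earlier in this guide, and the dates are arbitrary examples:

```python
from datetime import date


def covers_full_weeks(start: date, end: date, min_weeks: int = 2) -> bool:
    """True if the window (both dates inclusive) is a whole number of weeks and long enough."""
    days = (end - start).days + 1
    return days >= 7 * min_weeks and days % 7 == 0


# A Monday-to-Thursday window misses the weekend entirely.
print(covers_full_weeks(date(2024, 1, 1), date(2024, 1, 4)))   # 4 days
# Two complete weeks include every weekday twice.
print(covers_full_weeks(date(2024, 1, 1), date(2024, 1, 14)))  # 14 days
```

Requiring whole weeks means every day of the week is represented equally often, so weekend and weekday behavior are both reflected in the result.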

We hope you found this blog helpful. If you want to read more, subscribe to our blog and join our Facebook community to connect with other WordPress experts.